Original version of This story Appears in Quanta Magazine.

Large language models are so big that they work well. The latest models from Openai, Meta and Deepseek use hundreds of billions of “parameters.” This is an adjustable knob that determines the connection between the data and is adjusted during the training process. With more parameters, the model is more powerful and accurate as it allows better identification of patterns and connections.

But this power costs a lot. Training a model with hundreds of billions of parameters requires enormous computational resources. For example, Google reportedly spent training the Gemini 1.0 Ultra model $191 million. Large language models (LLMS) require considerable computing power each time they respond to a request. This makes it an infamous energy pig. A single query to chatgpt Consumption about 10 times According to the Electric Power Research Institute, there is as much energy as a single Google search.

In response, some researchers are now thinking small ideas. IBM, Google, Microsoft, and Openai have released all recently released small language models (SLMs) that use billions of parameters, which are just a small portion of LLM counterparts.

Small models are not used as such general tools unless they are large. But it excels at certain, narrowly defined tasks, such as summarizing conversations, answering patient questions as healthcare chatbots, and collecting data on smart devices. “For many tasks, the 8 billion parameter model is actually pretty good.” Zico Koltera computer scientist at Carnegie Mellon University. It can also run on a laptop or mobile phone instead of a huge data center. (There is no consensus on the exact definition of “Small,” but all new models make the most of around 10 billion parameters.)

To optimize the training process for these small models, researchers use several tricks. Large models often rub raw training data from the Internet. This data can be organized, messy and difficult to process. However, these large models can generate high quality datasets that can be used to train small models. This approach, known as knowledge distillation, is obtained to effectively pass the training, as is the way students give teachers. “reason [SLMs] When this small model is in very good condition, such small data is that we use high quality data instead of clutter,” Colter said.

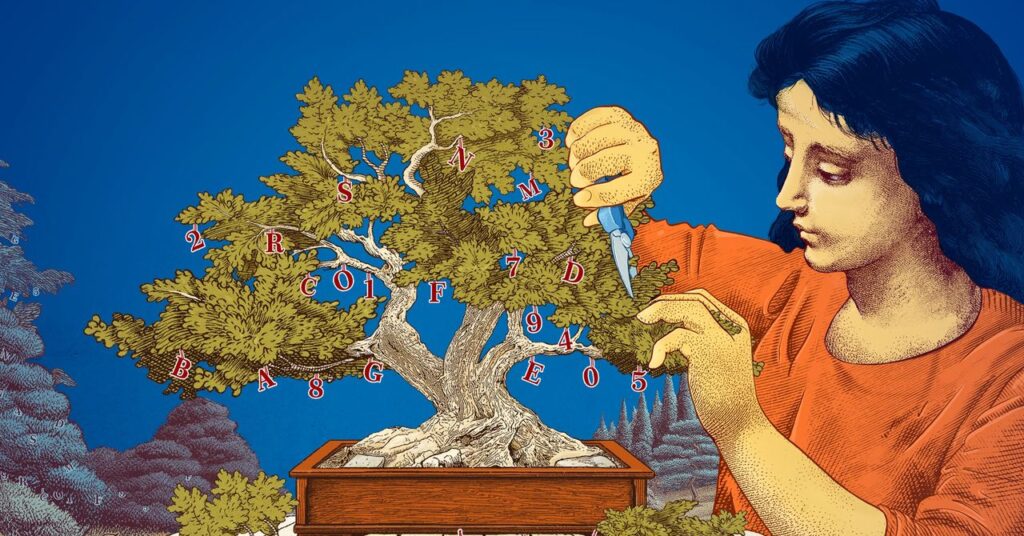

Researchers also explored ways to create small models by starting with larger models and then trimming them. One method known as pruning involves removing unnecessary or inefficient parts Neural Networks– A vast web of connected data points underlying a large model.

Pruning was inspired by the real neural networks that are the human brain. This gains efficiency by carving out connections between synapses as humans. Today’s pruning approach goes back to tracing Paper from 1989 Now in the meta, computer scientist Yann Lecun claimed that it could be removed without sacrificing up to 90% of the parameters of a trained neural network. He called this method “optimal brain damage.” Pruning helps researchers fine-tune small language models for a specific task or environment.

For researchers interested in how language models do, small models offer an inexpensive way to test new ideas. Additionally, the inference may be more transparent as it has fewer parameters than large models. “If you want to create a new model, you need to try things out,” he said. Leshem Choshenresearch scientist at MIT-IBM Watson AI Lab. “The small model allows researchers to experiment with lower stakes.”

Large and expensive models with ever-increasing parameters include generalized chatbots, image generators, and Drug discovery. However, for many users, small target models work the same way, but it makes it easier for researchers to train and build. “These efficient models save money, time and calculations,” Choshen said.

Original Story Reprinted with permission from Quanta Magazine, Edited independent publications of Simons Foundation Its mission is to enhance public understanding of science by covering research and development and trends in mathematics and physical sciences and life sciences.