Cosmos can also generate tokens for each of the avatar’s movements, which act like timestamps used to label brain data. Data labeling allows AI models to accurately interpret and decode brain signals and convert those signals into intended actions.

All this data is used to train a brain foundation model, a large-scale deep learning neural network that can be adapted to a wide range of applications rather than being trained from scratch for each new task.

“As we acquire more and more data, these foundation models become better and more generalizable,” says Shanechi. “The problem is that these foundation models actually require a lot of data to become foundational,” she says, which is difficult to achieve with invasive techniques that few people receive.

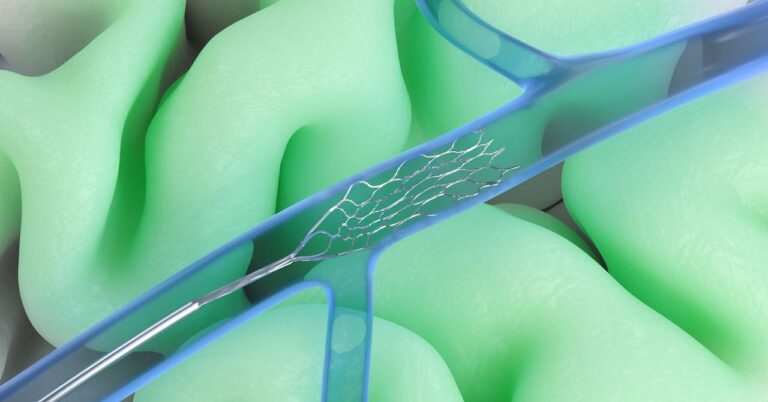

Synchron’s device is less invasive than those of many of its competitors. The electrode arrays made by Neuralink and other companies sit in the brain or on its surface. Synchron’s array is a mesh tube that is inserted at the base of the neck and threaded through a vein to read activity from the motor cortex. The procedure, which is similar to placing a cardiac stent in an artery, does not require brain surgery.

“The big advantage here is that you know how to do stents for millions of people around the world. Every part of the world has enough talent to do stents. A regular cath lab can do this.” In the US alone, as many as 2 million people receive stents each year to open their coronary arteries and prevent heart disease.

Since 2019, Synchron has surgically implanted BCIs in 10 subjects and has collected years of brain data from those people. The company is preparing to launch the larger clinical trials needed to seek commercial approval for the device. Because of the risk, cost, and complexity of brain surgery, there have been no large-scale trials of implanted BCIs so far.

Synchron’s goal of creating cognitive AI is ambitious and does not come without risk.

“What I think is possible in the near term is that this technology could give people more control over their environment,” says Nita Farahany, a professor of law and philosophy at Duke University who has written extensively about the ethics of BCIs. In the long run, Farahany says, as these AI models become more refined, they could go beyond detecting intentional commands to predicting or suggesting what a person might want to do with their BCI.

“To give people that kind of seamless integration with, or self-determination over, their environment, it needs to be able to detect it earlier, not just intentionally communicated speech or intentional motor commands,” she says.

That enters sticky territory: how much autonomy a user has, and whether the AI is consistently acting on the individual’s desires. It also raises questions about whether a BCI could change someone’s perception, thoughts, or intentionality.

Oxley says these concerns are already arising with generative AI. Using ChatGPT for content creation, for example, blurs the line between what people create and what AI creates. “I don’t think that issue is particularly special for BCI,” he says.

For people who have the use of their hands and voice, correcting AI-generated material, like autocorrect on a phone, is no big deal. But what happens if a BCI does something the user didn’t intend? “The user always drives the output,” says Oxley. However, he recognizes the need for some kind of option that lets humans override AI-generated suggestions. “You always need a kill switch.”